ADHOC - Astrophysical Data HPC Operating Center

The ADHOC data center is part of a larger project called STILES (Strengthening the Italian Leadership in ELT and SKA), an initiative funded by the National Recovery and Resilience Plan (PNRR) that aims to strengthen Italian leadership in the exploration of the Universe.

The main objective of the STILES project is to develop laboratories and instruments for the two largest ground-based telescopes of the coming decades, the European Extremely Large Telescope (E-ELT) and the Square Kilometre Array (SKA), as well as for other national and international astrophysical projects.

Coordinated by the National Institute for Astrophysics (INAF) in collaboration with seven Italian universities (University of Bologna "Alma Mater Studiorum"; University of Milan; University of Naples "Federico II"; University of Palermo; University of Rome "La Sapienza"; University of Rome "Tor Vergata"; University of Catania), the STILES project aims to:

- Invest in information technology by acquiring the hardware and software infrastructure essential for developing new instruments and analysing data, including a high-performance computing centre and AI-based software tools;

- Create national infrastructures for instrumentation verification, offering general services and multipurpose facilities for the characterisation of innovative instruments and methodologies;

- Implement a unique scientific and educational programme, including doctoral and post-doctoral programmes focused on ELT and SKA science and on the synergies of these telescopes with other international observational facilities, in order to support the careers of young researchers.

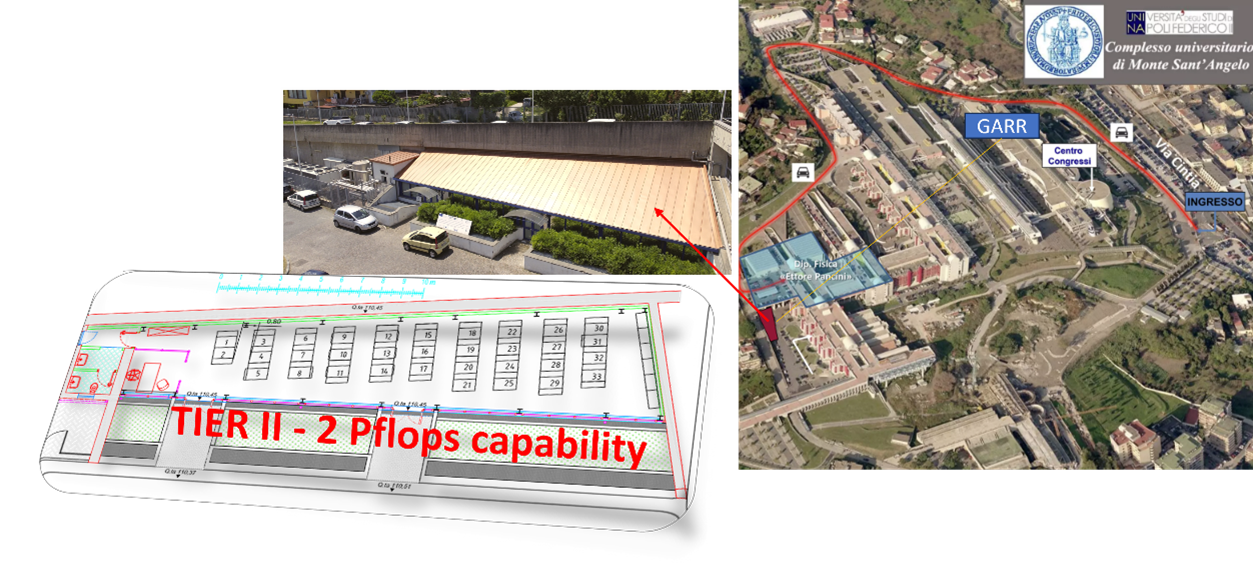

Location of the ADHOC data center within the University Complex of Monte Sant'Angelo

Resources and Services

ADHOC is a Tier-2 computing infrastructure integrated into DC1, a pre-existing computing center. It has a steady-state computing capacity of 2 petaflops and is equipped with:

- a total raw storage capacity of 20 petabytes (PB), split between two systems: (i) 8 PB of long-term tape storage in two units, 6 PB at the data processing center of the INAF headquarters in Bologna and 2 PB at DC1; (ii) 12 PB of "hot" disk storage, divided into 4 logical units of 3 PB each and managed by dedicated front-end servers;

- a computing system divided into two subsystems, HPC (High Performance Computing) and HTC (High Throughput Computing), logically organized into three modular clusters of different sizes. The HPC subsystem comprises 34 dual-processor servers dedicated to high-performance parallel computing: 22 are equipped with two H100 GPU cards each and 12 with two L40 GPU cards each. The HTC subsystem comprises 10 dual-processor servers, used for multi-process parallel computing with MPI (Message Passing Interface) and partly for managing and interfacing the devices that make up the infrastructure. Three additional HTC/HPC servers are kept separate from the clusters and dedicated to specific projects and services.

- a data network and monitoring system, connected via a redundant 10 Gb/s link to the GARR node at the CSI (University Center for Information Technology Services) on the same university campus. The data network consists of several sub-networks: 10/25 Gb/s for infrastructure interfacing and management/monitoring, 100 Gb/s for connecting the storage system and the HTC subsystem, and 200 Gb/s InfiniBand for the HPC subsystem.
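As a cross-check of the figures above, the following Python sketch encodes the quoted storage and server counts and derives the totals they imply. The group labels are illustrative, not ADHOC identifiers, and the transfer-time helper is an idealized estimate at nominal line rate that ignores protocol, filesystem and disk overhead, so real throughput will be lower.

```python
"""Back-of-the-envelope summary of the ADHOC hardware figures quoted above.

All capacities and counts come from the text; the group labels are
illustrative, and transfer_seconds() is an idealized nominal-rate estimate.
"""

from dataclasses import dataclass

# Raw storage, in petabytes
TAPE_PB = {"INAF Bologna": 6, "DC1": 2}  # two tape units
DISK_LOGICAL_UNITS = 4                    # "hot" disk storage
DISK_UNIT_PB = 3


@dataclass(frozen=True)
class NodeGroup:
    name: str
    servers: int
    gpus_per_server: int = 0
    gpu_model: str = ""

    @property
    def gpus(self) -> int:
        return self.servers * self.gpus_per_server


NODE_GROUPS = [
    NodeGroup("HPC (H100)", 22, 2, "H100"),
    NodeGroup("HPC (L40)", 12, 2, "L40"),
    NodeGroup("HTC", 10),
    NodeGroup("separate HTC/HPC", 3),
]


def storage_totals() -> dict[str, int]:
    """Sum tape and disk capacity (PB)."""
    tape = sum(TAPE_PB.values())
    disk = DISK_LOGICAL_UNITS * DISK_UNIT_PB
    return {"tape_pb": tape, "disk_pb": disk, "total_pb": tape + disk}


def gpu_totals() -> dict[str, int]:
    """Count GPU cards per model across all node groups."""
    totals: dict[str, int] = {}
    for group in NODE_GROUPS:
        if group.gpu_model:
            totals[group.gpu_model] = totals.get(group.gpu_model, 0) + group.gpus
    return totals


def transfer_seconds(terabytes: float, link_gbps: float) -> float:
    """Idealized time to move `terabytes` (decimal TB) over a `link_gbps` Gb/s link."""
    bits = terabytes * 8e12
    return bits / (link_gbps * 1e9)


if __name__ == "__main__":
    print(storage_totals())  # {'tape_pb': 8, 'disk_pb': 12, 'total_pb': 20}
    print(gpu_totals())      # {'H100': 44, 'L40': 24}
    print(sum(g.servers for g in NODE_GROUPS), "servers in total")  # 47 servers in total
    for gbps in (10, 100, 200):
        print(f"1 TB at {gbps} Gb/s: {transfer_seconds(1, gbps):.0f} s")
```

The totals confirm that the parts add up to the stated 20 PB, and the transfer estimates (800 s, 80 s and 40 s per terabyte at 10, 100 and 200 Gb/s) show why bulk data movement is kept on the faster internal sub-networks rather than the external link.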

From a functional point of view, the ADHOC data center consists of three clusters of computing and storage resources of different sizes, intended for incremental and differentiated use. The clusters are named newton (small), fermi (medium) and einstein (large).

The clusters are equipped with advanced tools for resource management and optimization:

- User management and authentication with FreeIPA;

- SLURM job scheduler for efficient resource allocation;

- Parallel computing with OpenMPI;

- GPU acceleration on NVIDIA cards of different models and computational power;

- Shared filesystems accessible through different protocols;

- Support for Python and scientific libraries for data analysis and simulations.
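Because the clusters combine SLURM scheduling with Python support, a batch job can itself be a Python script: sbatch reads `#SBATCH` directives from the comment lines at the top of any script with a shebang, and Python treats them as ordinary comments. The sketch below is hypothetical: the job name, time limit and output file name are placeholders, `--gres=gpu:1` assumes GPUs are configured as a SLURM generic resource named `gpu`, and ADHOC-specific partitions or accounts (not documented here) are omitted. It only reports where it ran and a few environment variables that SLURM typically sets for a job.

```python
#!/usr/bin/env python3
#SBATCH --job-name=hello-adhoc
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --gres=gpu:1
#SBATCH --time=00:05:00
#SBATCH --output=hello-%j.out
"""Minimal SLURM batch job written in Python (a sketch, not an ADHOC template).

The #SBATCH lines must precede the first executable statement so that
sbatch picks them up. Assumption: GPUs are exposed as GRES "gpu".
"""

import os
import socket


def slurm_context() -> dict[str, str]:
    """Collect a few job environment variables; '<unset>' when run outside SLURM."""
    keys = ("SLURM_JOB_ID", "SLURM_JOB_NODELIST", "SLURM_NTASKS", "CUDA_VISIBLE_DEVICES")
    return {key: os.environ.get(key, "<unset>") for key in keys}


def main() -> None:
    print(f"Running on host {socket.gethostname()}")
    for key, value in slurm_context().items():
        print(f"{key}={value}")


if __name__ == "__main__":
    main()
```

Submitting it with `sbatch hello_adhoc.py` (hypothetical file name) queues the job; `squeue -u $USER` lists its state, and the output is written to `hello-<jobid>.out`. Running the same file directly with `python3` also works, which makes it easy to test the logic before submitting.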

The Team

Coordination and Management

System Administration

Security and Networking Consulting

Dr. Davide Michelino

Dr. Bernardino Spisso

The team also includes several collaborators with scientific and engineering backgrounds in AI, data science, astrophysics and ICT.

Access and Support

Access to the services and resources offered by the data center is requested by completing and submitting an online form, and is governed by a specific usage policy.

- Policy Document: click here

- Online form for request of service access: click here

- For more information and requests: astroservice@unina.it